In this article, I'll discuss how principles of BDD can be used in Outside-In development. I'll begin with how features are defined in a Functional Specification and then brought into a tool to use in the development process. I'll discuss how tests can be created based on those same feature definitions and then used as a starting point for building the application.

Functional Specifications

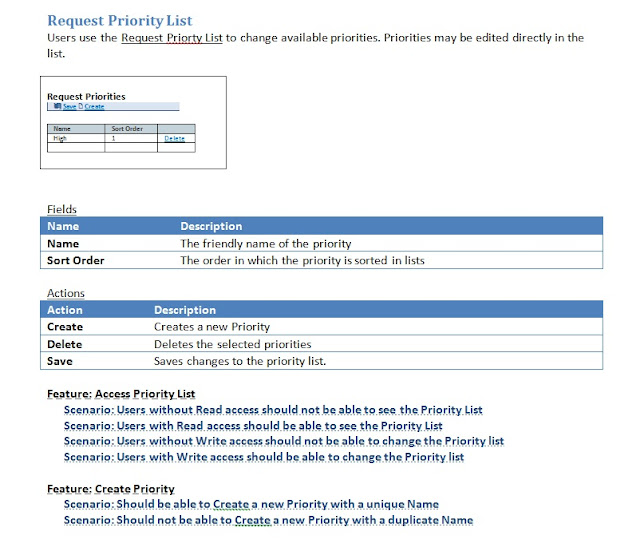

These days, I like to express specifications using the Gherkin syntax, somewhat. This syntax expresses the features of an application with feature and scenario statements. When I put specs together, I'll often include a UI mock up and possibly some definitions along with these scenario statements. Thus, my specs will look something like this:

The Gherkin portion is the "Feature:" and "Scenario:" statements.

Features represent specific functionality in the application. They should generally represent a single unit of work relevant to the user. Features should not be as granular as "select something from a dropdown list", nor should they be as coarse as "Order Entry Module." They should generally be a distinct task, such as "Create a new Sales Order" or "Add an Item to a Sales Order."

Scenarios represent permutations of a feature based on the state of the system. You would express normal, abnormal and exception circumstances as in any test. When defining scenarios, you should be comprehensive. Features and scenarios are not only the specifications for the system, but also the tests that you will create for the system and even its documentation. In addition, because features and scenarios are expressed in a way that the user will understand, they can survive the development cycle to be used in the next iteration of the application.

Let's look at a sample feature, then:

In order to ensure that deleting an Account Type does not impact any Customers

As an Account Administrator

I cannot Delete an Account Type if it is assigned to a Customer

Scenario: Should warn user when there are Customers of the Account Type when Deleting the Account Type

Scenario: Should warn user when there is an error when Deleting the Account Type

Scenario: Should Delete an Account Type when there are no Customers of the Account Type when Deleting the Account Type

This feature simply states a business rule that Account Types cannot be deleted if the account type is associated with any customers. It's simple enough to understand that there are account types for customers, so you can't very well go deleting account types without dealing with those customers. The data integrity rules must not allow a null account type for a customer, and we would rather avoid a nasty referential integrity error. So, we have a few basic scenarios that might occur and that we need to test for. Any business user would understand this, although we are not explicit about what "warn user" means. That's an implementation detail that does not necessarily involve the feature (although it could, if we wanted to specify it).
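To make the rule concrete, here is a minimal sketch of the domain objects this feature implies. Python is used only for brevity, the names (`AccountType`, `Customer`, `AccountTypeCatalog`, `AccountTypeInUseError`) are hypothetical, and raising an exception is just one assumed way of giving the presenter something to "warn user" about; the feature itself leaves that open.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class AccountType:
    name: str


@dataclass
class Customer:
    name: str
    account_type: AccountType  # the spec says this may never be null

    def __post_init__(self) -> None:
        if self.account_type is None:
            raise ValueError("A Customer must have an Account Type")


class AccountTypeInUseError(Exception):
    """Raised instead of attempting a delete that would break referential integrity."""


@dataclass
class AccountTypeCatalog:
    account_types: List[AccountType] = field(default_factory=list)
    customers: List[Customer] = field(default_factory=list)

    def delete(self, account_type: AccountType) -> None:
        # Business rule: an Account Type assigned to any Customer cannot be deleted.
        if any(c.account_type == account_type for c in self.customers):
            raise AccountTypeInUseError(
                f"Account Type '{account_type.name}' is assigned to a Customer"
            )
        self.account_types.remove(account_type)


# Usage: Gold is assigned to a Customer, Silver is not.
gold, silver = AccountType("Gold"), AccountType("Silver")
catalog = AccountTypeCatalog([gold, silver], [Customer("Acme", gold)])
catalog.delete(silver)  # allowed; deleting gold would raise AccountTypeInUseError
```

Each of the three scenarios maps onto one path through `delete`: the rule fires, the removal fails for some other reason, or the removal succeeds.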

Of course, this feature will not exist all by itself. There's not enough here to define an application. We can say, however, that there must be "Account Types" and there must be "Customers" and a "Customer" has a property that references an "Account Type" and that property cannot be null. In the context of the entire application, there will be other features that define forms and other domain objects and how they interact. The feature above merely serves as an example. You would have other features, such as:

Feature: Customers can be Searched by Name

Feature: Customers can be Searched by Number

Feature: Customers can be Created

Feature: Customers can be Deleted

Feature: Customers can be Changed

Feature: Account Types can be Created

Feature: Account Types can be Deleted

These features may elicit the response "duh." Yet, as every developer knows, they still must be built and they must be tested. So, we lay out every little feature along with the various scenarios needed to validate that it will work properly, according to the needs of the business. Such is a functional specification.

Mock Applications

The normal method of going from functional specs to development is to start laying out the designs needed to implement the specs. I used to use a lot of UML to do this, but I think there is now a better way. If I am building an application with a graphical user interface, my functional specs will have mockups of all the UIs that I am going to build. It does not matter how these mocked UIs are created; all that matters is that I've clearly defined what the user is going to get. So, thinking along the lines of building Outside-In, I can start with the UI, right? Well, I could, but I'd run into a significant testing hurdle. Automated tests are not easily created for UIs. That's one of the reasons that we have all those "MV" patterns: MVP, MVC, and MVVM. They all attempt to remove the business logic from the UI so that we can have more comprehensive testing (among other things). I suggest, then, that you start not quite with the UI, but with the View (they all have a view definition, which is an interface). I like the MVP pattern and will focus on that one in particular, but the principles apply to the others as well.

So, just like I demonstrated in Building Software Outside-In, we can create the interfaces for the view and the presenter just based on the specs. From there we can build the rest of the application using TDD methods. However, BDD features often describe interactions between views, and I want to test not only the granular interaction (one form should call a method that's supposed to open another form) but also a somewhat more robust interaction (when one form opens another form, the application state should change in a certain way). I want more than what mocking tools provide. I want a mock application.

A "Mock Application" is only a "Mock" in that a person cannot really do anything with it, because the UI is not really a UI. It exists for testing purposes only. Consider the intent of the "View" in the MVP (or any other) pattern. By its very nature, it is supposed to have no functionality. True, it will have functionality relevant to the platform (managing state in ASP.NET, for example), but that functionality has nothing to do with our application's true features. If you were to implement the view using a plain old class with nothing but properties, it could still function as the view layer of the application. You would need another application to actually perform actions in the application, and that is precisely what tests are for.
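A minimal sketch of that idea, assuming hypothetical names: `AccountTypeListView` stands in for the view interface (a `typing.Protocol` here, since Python has no separate interface keyword), and `PropertyOnlyView` is a view implemented as a plain class with nothing but properties. The presenter only ever talks to the interface, so it cannot tell that no real UI exists.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Protocol


class AccountTypeListView(Protocol):
    """The view contract: state only, no behavior."""
    account_types: List[str]
    selected_account_type: Optional[str]
    warning_message: Optional[str]


@dataclass
class PropertyOnlyView:
    """A view for the mock application: a plain class holding the view's state."""
    account_types: List[str] = field(default_factory=list)
    selected_account_type: Optional[str] = None
    warning_message: Optional[str] = None


class AccountTypeListPresenter:
    def __init__(self, view: AccountTypeListView, names: List[str]) -> None:
        self._view = view
        self._names = names

    def load(self) -> None:
        # Push data into the view; a real form would render it, this one just stores it.
        self._view.account_types = sorted(self._names)


# A test "acts as the user" by reading and writing the view's properties.
view = PropertyOnlyView()
AccountTypeListPresenter(view, ["Silver", "Gold"]).load()
view.selected_account_type = view.account_types[0]
```

A WinForms or ASP.NET implementation of the same interface would add rendering and platform state management, but the presenter code would not change.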

To build the view layer of an application, all you really need to do is implement the view interfaces and any infrastructure required by the platform to allow your views to interact. I like the Application Controller pattern for this, which is described fairly well in The Presenter in MVP Implementations. The Application Controller handles the navigation between forms, which can itself be abstracted behind transition objects so that the mechanics of opening a view (Response.Redirect or Form.Show, for example) do not have to interfere with the application logic. Using an Application Controller can minimize the platform-specific implementation to just the views and a few transition objects. The only other consideration is keeping track of which view is active, although in many circumstances that is not even relevant.
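Here is a minimal sketch of an Application Controller that hands the mechanics of showing a view to a transition object. `Transition`, `RecordingTransition`, `ApplicationController` and the view names are hypothetical. On a real platform the transition would wrap something like `Form.Show` or `Response.Redirect`; in the mock application it simply records where the application went, which is exactly what a test wants to check.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Protocol


class Transition(Protocol):
    """Hides how a view is actually opened on a given platform."""
    def show(self, view_name: str) -> None: ...


@dataclass
class RecordingTransition:
    """Mock-application transition: records navigation instead of opening windows or pages."""
    shown: List[str] = field(default_factory=list)

    def show(self, view_name: str) -> None:
        self.shown.append(view_name)


class ApplicationController:
    """Owns navigation between views and tracks which view is active."""

    def __init__(self, transition: Transition) -> None:
        self._transition = transition
        self.active_view: Optional[str] = None

    def navigate_to(self, view_name: str) -> None:
        # Presenters ask the controller to navigate; they never open views themselves.
        self._transition.show(view_name)
        self.active_view = view_name


transition = RecordingTransition()
app = ApplicationController(transition)
app.navigate_to("AccountTypeList")
app.navigate_to("AccountTypeDetail")
```

Only the transition class is platform specific, which is what keeps the rest of the controller testable.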

The Process of BDD Tests

The Gherkin language specifies a syntax for Scenarios. This syntax is a set of statements that begin with "Given", "When" and "Then". They correspond directly to the typical "Setup", "Execute" and "Validate" steps of a test. The logic goes as follows:

Given that the application is in a certain state

When I perform some operation

Then certain things happen

These statements should be expressed in a way that is easily understood by the user base. They may be tedious at times, but they still make sense to everyone. So, from the functional specs, each scenario is further refined to express this level of detail. Consider one of our sample scenarios:

Scenario: Should warn user when there are Customers of the Account Type when Deleting the Account Type

I might express the steps in the scenario as follows:

Given that I have opened the Account Type List

And that I have selected an Account Type that is associated with a Customer

When I Delete that Account Type

Then I am warned that the Account Type cannot be Deleted

These are, in fact, the steps I need to take in code in order to test the scenario. If I use a tool like SpecFlow, then I can have individual, re-usable methods generated for each step that I can then implement.
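SpecFlow binds each step to a C# method through attributes. As a rough, tool-neutral illustration only, the Python sketch below hand-rolls a tiny step registry and drives the scenario above against a mock application made of a property-only view and a presenter. The `step` decorator and `run_scenario` are hypothetical and are not SpecFlow's API or any other library's; the Account Type names are made up.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set, Tuple

# Hypothetical step registry: (pattern, step method) pairs, reusable across scenarios.
STEPS: List[Tuple[re.Pattern, Callable[[Dict], None]]] = []


def step(pattern: str) -> Callable:
    def register(func: Callable[[Dict], None]) -> Callable[[Dict], None]:
        STEPS.append((re.compile(pattern), func))
        return func
    return register


def run_scenario(lines: List[str], context: Dict) -> None:
    for line in lines:
        _keyword, _, text = line.partition(" ")  # strip Given/When/Then/And
        for regex, func in STEPS:
            if regex.fullmatch(text):
                func(context)
                break
        else:
            raise LookupError(f"No step definition matches: {line}")


@dataclass
class AccountTypeListView:
    account_types: List[str] = field(default_factory=list)
    selected: Optional[str] = None
    warning: Optional[str] = None


class AccountTypeListPresenter:
    def __init__(self, view: AccountTypeListView, in_use: Set[str]) -> None:
        self.view, self.in_use = view, in_use

    def open(self, names: List[str]) -> None:
        self.view.account_types = list(names)

    def delete_selected(self) -> None:
        name = self.view.selected
        if name in self.in_use:
            self.view.warning = f"'{name}' is assigned to a Customer and cannot be deleted"
        else:
            self.view.account_types.remove(name)


@step(r"that I have opened the Account Type List")
def open_list(ctx: Dict) -> None:
    ctx["presenter"].open(["Gold", "Silver"])


@step(r"that I have selected an Account Type that is associated with a Customer")
def select_in_use(ctx: Dict) -> None:
    ctx["view"].selected = "Gold"


@step(r"I Delete that Account Type")
def delete_it(ctx: Dict) -> None:
    ctx["presenter"].delete_selected()


@step(r"I am warned that the Account Type cannot be Deleted")
def assert_warned(ctx: Dict) -> None:
    assert ctx["view"].warning is not None


SCENARIO = [
    "Given that I have opened the Account Type List",
    "And that I have selected an Account Type that is associated with a Customer",
    "When I Delete that Account Type",
    "Then I am warned that the Account Type cannot be Deleted",
]

view = AccountTypeListView()
context = {"view": view, "presenter": AccountTypeListPresenter(view, in_use={"Gold"})}
run_scenario(SCENARIO, context)
```

Because each step is its own method, "that I have opened the Account Type List" can be reused unchanged by the other two scenarios of the feature.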

Using BDD Tests and Mock Applications to Design and Build

How BDD works with SpecFlow is beyond the scope of this article. What I really want to discuss is the process of defining these tests, which came from a functional spec, so that you can build an application from the Outside-In. To be sure, in order for this to work, you must have done the following:

1. Decided on the architecture and platform and built a foundation. This is typically the same old stuff you build for every application. It's a starting point and does not express any business functionality.

2. Built a testing infrastructure. This is important only in some circumstances. For example, if I want to use Entity Framework Code First development, it would really be helpful if I put together the infrastructure to generate my database on the fly to SQL CE or something.

3. Created the functional specs - ostensibly, these would have been reviewed and approved by the business unit the application is for.

You might flesh out all the scenarios before coding. That can be helpful to clarify how the application works. On the other hand, you might just as likely start with a few scenarios and work from there. That would fit nicely into an Agile process. Either way, once you have the groundwork done, you begin in the same way: Designing the View and Domain Objects.

One of BDD's intentions is to establish a vocabulary that is understood by both developers and the business unit. That's also integral to Domain-Driven Design. You might have noticed my odd use of capitalization in the scenario definitions. I did this to highlight potential candidates for domain objects, properties and methods. I do not believe these will always be everything you need, but they will be the most important parts. Once you work through a few scenarios, you'll see what I mean. So, it should be fairly straightforward to define the domain objects, views and presenters you need to implement the scenarios you have defined. To be sure, at this point we are not saying you have completely defined any of this; you've just defined enough to complete a particular scenario of a particular feature - very Agile, I think.

Defining these three parts (the view, presenter and domain) is all you need to implement the step definitions that complete the BDD feature tests - but you must implement them. Hmm... in order to implement the presenter, you need to define the service, and in order to implement the service, you need to define the data access layer. Well, not exactly. We can simply use a mocking framework to stand in for the presenter's dependencies and be done with it.

Well, I don't actually mean to be done with it, because I want these tests to run the entire stack. Yet, I am building from Outside-In and I'd like to validate each layer as I go. I can use unit tests to do that, and I should write unit tests per typical TDD methodology, but those tests are granular. My BDD tests are defined as application-level features. I want to include the Application Controller in these tests and let the presenters be properly instantiated. For me, that means they are created through a factory. It is, in fact, the power of factories that will allow me to run these BDD tests before I even implement my service (or design my data access layer, for that matter).
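For the granular, TDD-style tests, a mocking framework on its own is enough. Here is a minimal sketch using Python's standard `unittest.mock`; `AccountTypeService`, `AccountTypeListPresenter` and the `make` helper are hypothetical names, and the checks mirror the three scenarios of the sample feature. Note that nothing here exercises an Application Controller or a factory, which is exactly the gap the BDD tests are meant to fill.

```python
from abc import ABC, abstractmethod
from types import SimpleNamespace
from typing import Optional
from unittest.mock import Mock


class AccountTypeService(ABC):
    """Service-layer interface; no implementation exists yet."""

    @abstractmethod
    def customers_using(self, account_type: str) -> int: ...

    @abstractmethod
    def delete(self, account_type: str) -> None: ...


class AccountTypeListPresenter:
    def __init__(self, view, service: AccountTypeService) -> None:
        self.view, self.service = view, service

    def delete_selected(self) -> None:
        name = self.view.selected
        if self.service.customers_using(name) > 0:
            self.view.warning = f"'{name}' is assigned to a Customer and cannot be deleted"
            return
        try:
            self.service.delete(name)
        except Exception as exc:  # scenario: warn user when there is an error
            self.view.warning = f"Could not delete '{name}': {exc}"


def make(selected: str, customers: int, delete_error: Optional[Exception] = None):
    view = SimpleNamespace(selected=selected, warning=None)
    service = Mock(spec=AccountTypeService)  # only the interface's methods are allowed
    service.customers_using.return_value = customers
    service.delete.side_effect = delete_error  # None means "delete succeeds"
    return view, service, AccountTypeListPresenter(view, service)


# Scenario: warn when there are Customers of the Account Type.
view, service, presenter = make("Gold", customers=2)
presenter.delete_selected()
assert view.warning is not None
service.delete.assert_not_called()
```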

Using Factories to Assist in Outside-In Development

Factories are important in a well-designed application. Factories allow us to leverage Dependency Injection to accomplish Outside-In development. When we define each layer of our application (presentation, service and data access), we define factories for those layers. To be sure, these are abstract factories, as they are implemented behind an interface. In our mock application, we would implement factories that produce mock-ups of the layers we have not yet created. In this way, the tests can run and validate the work that we have done.

Consider the following model for an abstract factory graph:

The Presenter Factory will require a Service Factory, which will require a Data Access Factory. All this is wired together when the application starts. In our mock application, we simply create a mock-up of the factory for the layers that we have not yet coded. So, if we are working on the Presenter, which is required for the BDD tests, we can create mocks for the service layer factory and the service layer. In this way, our mocks will provide the expected results and allow our tests to run.
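A minimal sketch of that wiring, with hypothetical names throughout: the presentation layer is real, while `StubServiceFactory` and `StubAccountTypeService` stand in for the service layer that has not been written yet. In the finished application the composition root would give the presenter factory a real service factory that in turn holds a data access factory; in this mock application the chain simply stops at the stub.

```python
from abc import ABC, abstractmethod
from types import SimpleNamespace
from typing import Iterable, List


class AccountTypeService(ABC):
    @abstractmethod
    def customers_using(self, name: str) -> int: ...

    @abstractmethod
    def delete(self, name: str) -> None: ...


class ServiceFactory(ABC):
    """Abstract factory for the service layer."""

    @abstractmethod
    def account_type_service(self) -> AccountTypeService: ...


class AccountTypeListPresenter:
    def __init__(self, view, service: AccountTypeService) -> None:
        self.view, self.service = view, service

    def delete_selected(self) -> None:
        name = self.view.selected
        if self.service.customers_using(name) > 0:
            self.view.warning = f"'{name}' is assigned to a Customer"
        else:
            self.service.delete(name)


class PresenterFactory:
    """Presenters are only ever built here, so their services arrive by injection."""

    def __init__(self, services: ServiceFactory) -> None:
        self._services = services

    def account_type_list(self, view) -> AccountTypeListPresenter:
        return AccountTypeListPresenter(view, self._services.account_type_service())


# Mock application: stand-ins for the service layer, which does not exist yet.
class StubAccountTypeService(AccountTypeService):
    def __init__(self, in_use: Iterable[str]) -> None:
        self.in_use = set(in_use)
        self.deleted: List[str] = []

    def customers_using(self, name: str) -> int:
        return 1 if name in self.in_use else 0

    def delete(self, name: str) -> None:
        self.deleted.append(name)


class StubServiceFactory(ServiceFactory):
    def __init__(self, service: AccountTypeService) -> None:
        self._service = service

    def account_type_service(self) -> AccountTypeService:
        return self._service


def build_mock_application(in_use: Iterable[str]):
    """Test composition root: wire the factory graph, stubbing the unbuilt layers."""
    service = StubAccountTypeService(in_use)
    return PresenterFactory(StubServiceFactory(service)), service


presenters, service = build_mock_application(in_use={"Gold"})
view = SimpleNamespace(selected="Gold", warning=None)
presenters.account_type_list(view).delete_selected()
```

The BDD step definitions would obtain presenters only through `presenters`, so swapping the stub for the real service factory later requires no change to the steps.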

Once the presentation layer is complete and all tests pass, we move on to the service layer. Again, we would create a mock for the data access layer objects and factory which will allow our service layer to work. Our BDD tests should again pass, once the service layer is complete.
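The same idea one layer down, as a minimal sketch with hypothetical names: `AccountTypeService` is now the real service layer, and only data access is faked, by `InMemoryAccountTypeRepository` behind `StubDataAccessFactory`. The presenter and its factory would not need to change; only the composition root swaps which service factory it hands them.

```python
from abc import ABC, abstractmethod
from typing import Dict


class AccountTypeRepository(ABC):
    @abstractmethod
    def count_customers_with(self, account_type: str) -> int: ...

    @abstractmethod
    def delete(self, account_type: str) -> None: ...


class DataAccessFactory(ABC):
    """Abstract factory for the data access layer."""

    @abstractmethod
    def account_types(self) -> AccountTypeRepository: ...


class AccountTypeService:
    """The real service layer, now under construction."""

    def __init__(self, data: DataAccessFactory) -> None:
        self._repository = data.account_types()

    def customers_using(self, name: str) -> int:
        return self._repository.count_customers_with(name)

    def delete(self, name: str) -> None:
        # Enforce the rule here too, so data access never sees an integrity violation.
        if self.customers_using(name) > 0:
            raise ValueError(f"'{name}' is assigned to a Customer")
        self._repository.delete(name)


class ServiceFactory:
    def __init__(self, data: DataAccessFactory) -> None:
        self._data = data

    def account_type_service(self) -> AccountTypeService:
        return AccountTypeService(self._data)


# Mock application: the data access layer is still a stand-in.
class InMemoryAccountTypeRepository(AccountTypeRepository):
    def __init__(self, customers_by_type: Dict[str, int]) -> None:
        self.customers_by_type = dict(customers_by_type)

    def count_customers_with(self, account_type: str) -> int:
        return self.customers_by_type.get(account_type, 0)

    def delete(self, account_type: str) -> None:
        del self.customers_by_type[account_type]


class StubDataAccessFactory(DataAccessFactory):
    def __init__(self, repository: AccountTypeRepository) -> None:
        self._repository = repository

    def account_types(self) -> AccountTypeRepository:
        return self._repository


repository = InMemoryAccountTypeRepository({"Gold": 3, "Silver": 0})
services = ServiceFactory(StubDataAccessFactory(repository))
services.account_type_service().delete("Silver")
```

When the data access layer is finally written (for example against Entity Framework), only `StubDataAccessFactory` is replaced, and the same BDD tests run the full stack.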